I’m working on a presentation on Privacy by Design (PbD) for the IAPP Atlanta KnowledgeNet chapter in September. The purpose of my presentation is to do a deep dive into “Embedding Privacy into Design”, one of the seven PbD principles. While many practitioners have a high-level understanding of PbD, I find that understanding breaks down when it comes to actually building or engineering a system. The problem is that most practitioners have a small toolset, both for identifying privacy invasions and for controlling them. Through my talk and this blog, I’m hoping to educate privacy professionals on other tools they can have at their disposal.

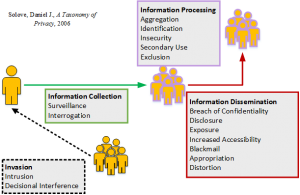

In preparing for my KnowledgeNet presentation, I started working on an example that would demonstrate an unconventional privacy invasion along with non-standard tools to address it. I say unconventional because most practitioners, especially in the U.S., become hyper-focused on identity theft, financial crimes and health-related issues owing to our sectoral laws. Similarly, I say non-standard tools because most technical tools revolve around keeping information confidential (e.g., encryption) and most non-technical tools focus on the Fair Information Practice Principles (FIPPs). The example I chose to create for my talk is “Sea-bnb”, a sharing-economy service that allows yacht owners to rent their yachts to others when they aren’t using them. In my analysis, I focus on the privacy invasion of Exclusion, an issue of Information Processing under the Solove Taxonomy of Privacy. In researching the issue for the presentation, I found out that Airbnb recently hired former U.S. Attorney General Eric Holder to deal with issues of discrimination at that service. I wrote this post so that they (and others) can learn how embedding privacy controls in system design can mitigate issues of discrimination.

Exclusion is the privacy invasion of failing to let individuals know about the data others hold about them, or failing to let them participate in its use. It is a form of Kafkaesque nightmare. Egregious examples include the TSA no-fly list and mortgage brokers using social media to determine creditworthiness before sending marketing solicitations to those deemed worthy.

In diagramming a privacy invasion, one determines the invasion, the invading party, the interceding party, and finally what controls the interceding party can use to mitigate the invasion.

For our purposes, the individual is the Lessee, the invading party is the Lessor, the invasion is Exclusion and the interceding party is Airbnb. Now we have to investigate which controls are available to Airbnb. Controls come in several forms: policy controls, technical point controls and architectural controls. Given that Airbnb is an operating entity with an existing architecture, they can’t really take advantage of architectural controls without drastically altering their business model, something they probably aren’t willing to do at this point. Adopting a Craigslist-style anonymous forum for posting available rentals is just not in the picture, though an upstart could certainly challenge Airbnb with a more privacy-focused service built from the ground up with specific architectural designs around privacy.

This leaves us with policy controls and technical point controls. What follows is by no means an exhaustive list, merely a suggestive one. We’ll start with policy controls.

Policy Controls

A policy control is, as the name implies, an adopted and perhaps enforced policy that mitigates the privacy invasion. Policies can be derived from laws and regulations, third-party requirements, industry standards or even a corporate ethos. A policy is only as successful, though, as its adoption and enforcement. Generally, policies are fairly high level; they are followed by specific requirements to enable the policy and then by specific implementations in the given system. Below is an example:

| Policy Source | Control | Implementation Methods |
|---|---|---|
| Law & Regulation – Section 3604(c) of the Federal Fair Housing Act | Don’t allow Lessors to post advertisements with discriminatory preferences in the listing | 1) Inform Lessors when creating their property profiles that they are not allowed to post discriminatory preferences; 2) Use automated scans to look for discriminating terms in property listings |

For each policy source, there could be dozens of specific controls, and for each control there could be dozens of implementation methods. In general, the sources, controls and implementation methods should be application- and technology-agnostic. Only when we apply the implementation methods to a particular application do we determine whether a given method is useful for our system.
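The second implementation method in the table, automated scans for discriminating terms, could start as something as simple as the sketch below. It assumes listings arrive as free-form text, and the phrase list and the name `scan_listing` are hypothetical illustrations, not anything Airbnb is known to use.

```python
import re

# Hypothetical, deliberately tiny list of discriminatory phrases. A real list
# would be curated with legal counsel and would still miss paraphrases.
FLAGGED_PHRASES = [
    "whites only",
    "no foreigners",
    "english speakers only",
]

# One case-insensitive pattern per phrase, anchored on word boundaries so that
# the phrase is not matched inside longer words.
_PATTERNS = [
    (phrase, re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE))
    for phrase in FLAGGED_PHRASES
]


def scan_listing(text: str) -> list[str]:
    """Return the flagged phrases found in a listing's free-form text."""
    return [phrase for phrase, pattern in _PATTERNS if pattern.search(text)]


if __name__ == "__main__":
    print(scan_listing("Cozy cabin. Whites Only, please."))  # ['whites only']
    print(scan_listing("Great view of the bay"))             # []
```

Exact-phrase matching is easy to evade (extra spaces, misspellings, coded language), so a hit is probably best treated as a reason to route the listing to human review rather than as proof of a violation.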

Technical Point Controls

While some implementation methods for policy controls may be technical (e.g., encryption as a requirement under PCI-DSS), technical point controls prevent privacy invasions in ways that may not be directly implicated by a specific policy source or control. For instance, in the PCI-DSS world there is no requirement to tokenize card numbers, but that technical control goes a long way toward reducing the potential for cardholder data breaches. In my analysis, I identified a number of technical point controls to apply to the Sea-bnb example that are equally applicable to Airbnb. I present them below:

Obfuscation – Masking

Obfuscation is the intentional hiding of the meaning of information. It includes masking, tokenization, randomization, noise and hashing. Masking, the partial or complete hiding of information from display in a particular view, is a mitigating control for the Exclusion privacy invasion. Notice we aren’t talking about a specific data element, form field, or medium of information flow but rather the conveyance of ethnicity as information, regardless of form. It could be transferred to the Lessor in many ways. There could be an explicit field asking for ethnic identification, it could be conveyed through a picture of the Lessee, an audio or video introduction, their name could convey it, a free-form textual description of them, or even the words they choose when writing an introduction could be suggestive of particular ethnicities. Masking could be applied to all of these. An ethnicity field could be displayed to the Lessor as ******, masking the underlying answer. The Lessee’s name could be displayed as initials. Instead of Muhammed Abdulla, the name could be shown as “M. A.” A free form textual description could be scrubbed of ethnic implications (either directly striking a reference to “Mexican-American” or indirectly “Hola Amigo!”).
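A minimal sketch of the two masking examples above: fully masking an ethnicity field and partially masking a name down to initials. It assumes a Lessee profile is a plain dictionary, and the field names `name` and `ethnicity` and the function names are hypothetical.

```python
MASK = "******"  # fixed width, so the mask does not leak the length of the answer


def mask_value(_value: str) -> str:
    """Completely mask a field in the Lessor's view (e.g. a self-reported ethnicity)."""
    return MASK


def initials(full_name: str) -> str:
    """Partially mask a name by reducing each part to its initial."""
    return " ".join(part[0].upper() + "." for part in full_name.split())


def lessor_view(profile: dict) -> dict:
    """Build the Lessor-facing copy of a Lessee profile; the stored profile is untouched."""
    view = dict(profile)
    if "ethnicity" in view:
        view["ethnicity"] = mask_value(view["ethnicity"])
    if "name" in view:
        view["name"] = initials(view["name"])
    return view


if __name__ == "__main__":
    print(lessor_view({"name": "Muhammed Abdulla", "ethnicity": "(any value)", "city": "Atlanta"}))
    # {'name': 'M. A.', 'ethnicity': '******', 'city': 'Atlanta'}
```

Masking here happens when the view is built, not when data is stored, so every path that exposes a profile to Lessors (web pages, APIs, notification emails) would need to go through the same function.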

Data Minimization

Data minimization is the reduction in the collection, storage, processing or disclosure of data. One of the best defenses against information privacy invasions is not to collect data, pure and simple. Certainly, in Airbnb’s case, there might not be any reason to collect ethnicity directly (unless, perhaps, it is needed to show compliance with anti-discrimination laws), so forgoing an ethnicity field might be an option. But sometimes you need information that may also inadvertently convey information you don’t want. An example here would be, as mentioned above, a picture. Maybe Airbnb wants an image to provide to the Lessor so they know whom to look for when the Lessee arrives. There are other ways to prove rights of access, but that would be a business decision to be weighed.
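One way to make “don’t collect it” an engineering rule rather than a policy aspiration is an allow-list applied before anything is stored. The sketch below assumes sign-up data arrives as a dictionary; the field names in `COLLECTED_FIELDS` are hypothetical.

```python
# Hypothetical allow-list: the only profile fields the service has decided it needs.
COLLECTED_FIELDS = {"email", "phone", "display_name"}


def minimize(submission: dict) -> dict:
    """Keep only allow-listed fields; anything else is dropped before storage."""
    return {key: value for key, value in submission.items() if key in COLLECTED_FIELDS}


if __name__ == "__main__":
    raw = {"email": "lessee@example.com", "ethnicity": "(any value)", "photo": b"..."}
    print(minimize(raw))  # {'email': 'lessee@example.com'}
```

An allow-list, rather than a deny-list of sensitive fields, means a newly added form field is excluded by default until someone makes the business case for collecting it.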

Data Minimization – Granulation

Granulation is the reduction of the specificity of data. For instance, if a beer website wants to know someone is over 21 before providing them information on its product, it usually asks for a birth date. But that is much more privacy invasive (an Interrogation privacy invasion) than just asking whether they are over 21. Perhaps these sites believe that asking for a specific birth date is somehow more likely to yield an accurate answer than asking for an age, though I would question such over-reliance. Granulation, in our context, would suggest that we reduce the granularity of the information collected. Perhaps, instead of 20 different ethnicities, we reduce it to 3. Again, we aren’t assuming anything about the specific system (I don’t think Airbnb collects ethnicity), but merely showing that granulation is a valid technical point control that engineers can decide where to apply appropriately.
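The most privacy-friendly version of the beer-site example is to ask the yes/no question directly and never see a birth date at all. Where an exact date has already been supplied for some other reason, a granulated value can be derived and only that stored, as in this sketch. The function names and the `over_21` key are hypothetical.

```python
from datetime import date


def is_at_least(birth_date: date, years: int, today: date) -> bool:
    """True if someone born on birth_date has turned `years` by `today`."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    return age >= years


def granulated_record(birth_date: date, today: date) -> dict:
    # Only the coarse answer is kept; the exact birth date is not stored.
    return {"over_21": is_at_least(birth_date, 21, today)}


if __name__ == "__main__":
    print(granulated_record(date(2000, 6, 15), date(2021, 6, 15)))  # {'over_21': True}
    print(granulated_record(date(2000, 6, 15), date(2021, 6, 14)))  # {'over_21': False}
```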

Data Minimization – Compression

Compression is the reduction in fidelity of data, eliminating distinctness. Compression of certain data types could mitigate an Exclusion invasion because it could eliminate the distinctness between ethnicities. Consider an audio file where compression reduces fidelity. One could compress it to the point that spoken words remain understandable but certain vocal markers that might be indicative of particular ethnicities can no longer be distinguished.
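To make “reducing fidelity” concrete, the sketch below applies two crude lossy steps to raw audio samples: it keeps only every n-th sample (a lower sample rate) and snaps each sample to a small number of levels (a lower bit depth). It assumes samples are floats in the range -1.0 to 1.0, and `reduce_fidelity` is a hypothetical name. Real codecs are far more sophisticated (this version skips the anti-aliasing filter a proper resampler would use), and whether any setting removes vocal markers of ethnicity while keeping speech intelligible is an empirical question this code does not answer.

```python
def reduce_fidelity(samples: list[float], keep_every: int = 2, levels: int = 16) -> list[float]:
    """Crudely lower the fidelity of audio samples in the range [-1.0, 1.0].

    Keeps only every `keep_every`-th sample and quantizes each kept sample
    to one of `levels` evenly spaced values.
    """
    if keep_every < 1 or levels < 2:
        raise ValueError("keep_every must be >= 1 and levels must be >= 2")
    step = 2.0 / (levels - 1)  # spacing between quantization levels across [-1, 1]
    out = []
    for s in samples[::keep_every]:
        s = max(-1.0, min(1.0, s))  # clamp out-of-range input
        out.append(round((s + 1.0) / step) * step - 1.0)
    return out


if __name__ == "__main__":
    print(reduce_fidelity([0.9, 0.1, -0.4, 0.3], keep_every=2, levels=3))  # [1.0, 0.0]
```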

Data Minimization – De-identification

De-identification is the removal of identifying data elements. Removing a person’s name, or even a chosen pseudonym, will reduce the likelihood of a Lessor researching the individual, such as through Facebook or Google. Removing this option reduces the chance that the Lessor will indirectly determine the individual’s ethnicity. Care must be taken, though, to remove not only directly identifying data but also data that could be combined to re-identify people. As shown years ago, somewhere between 63% and 87% of individuals in the U.S. can be uniquely identified by gender, date of birth and ZIP code.
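The sketch below illustrates both halves of that warning: it drops direct identifiers and coarsens the gender/birth date/ZIP combination (birth date to year, ZIP to its first three digits, loosely in the spirit of HIPAA Safe Harbor’s treatment of dates and ZIP codes), then measures the smallest group of records sharing the same quasi-identifiers, the “k” in k-anonymity. The field names and function names are hypothetical, and birth dates are assumed to be ISO `YYYY-MM-DD` strings.

```python
from collections import Counter

DIRECT_IDENTIFIERS = {"name", "pseudonym", "email"}  # hypothetical field names


def deidentify(record: dict) -> dict:
    """Drop direct identifiers and coarsen the birth date and ZIP quasi-identifiers."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "birth_date" in out:  # "YYYY-MM-DD" -> birth year only
        out["birth_year"] = out.pop("birth_date")[:4]
    if "zip" in out:  # 5-digit ZIP -> 3-digit prefix
        out["zip3"] = out.pop("zip")[:3]
    return out


def smallest_group(records: list[dict], keys: tuple[str, ...]) -> int:
    """Size of the smallest group sharing identical values for `keys` (0 if no records)."""
    if not records:
        return 0
    counts = Counter(tuple(r.get(k) for k in keys) for r in records)
    return min(counts.values())


if __name__ == "__main__":
    rec = {"name": "A. Person", "zip": "30303", "birth_date": "1980-04-02", "gender": "F"}
    print(deidentify(rec))  # {'gender': 'F', 'birth_year': '1980', 'zip3': '303'}
```

A `smallest_group` result of 1 over `("gender", "birth_year", "zip3")` means at least one person is still unique on those fields, so more coarsening (or suppression) would be needed before the data is shown.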

Data Minimization – Aggregation

Aggregation is the combination of data from multiple data subjects. If there are certain data elements on which we allow Lessors to discriminate, they could be presented in a form that aggregates potential Lessees. For instance, Airbnb could perform background checks on users and allow Lessors to select only individuals with completed background checks or no felony convictions. So instead of being shown a specific profile with a checkmark and choosing on an individual basis whether to rent to that person, the Lessor could be given grouping options.
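A sketch of what “grouping options” might look like: the Lessor configures a rule over permitted criteria and sees only how many applicants qualify, never who they are. The classes, fields and function names are hypothetical and cover just the two criteria mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    # Only the attributes a Lessor is allowed to filter on (hypothetical).
    background_check_done: bool
    felony_convictions: int


@dataclass
class LessorRule:
    require_background_check: bool = False
    exclude_felonies: bool = False

    def admits(self, a: Applicant) -> bool:
        """Apply the Lessor's group-level criteria to one applicant."""
        if self.require_background_check and not a.background_check_done:
            return False
        if self.exclude_felonies and a.felony_convictions > 0:
            return False
        return True


def eligible_count(rule: LessorRule, applicants: list[Applicant]) -> int:
    """What the Lessor sees: how many applicants qualify, not which ones."""
    return sum(rule.admits(a) for a in applicants)


if __name__ == "__main__":
    pool = [Applicant(True, 0), Applicant(False, 0), Applicant(True, 2)]
    print(eligible_count(LessorRule(require_background_check=True, exclude_felonies=True), pool))  # 1
```

In this design the booking decision itself would be made by the platform for any admitted applicant, so the Lessor never gets a per-person accept/decline choice on which to act out a bias.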

Security – Access Management

Security and access control are things almost everyone is familiar with. Access management is about giving people access to certain information or features based on their need to know to perform an action. Here is where time-based restrictions could come into play. For instance, Lessors might not be given pictures of the Lessees until after they have chosen to rent to them. This satisfies the need to identify the Lessee when they arrive to pick up a key, but doesn’t give the Lessor the opportunity to discriminate at the point of deciding whether to rent to them.
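The time-based restriction above amounts to an access check that depends on the state of the booking. A minimal sketch, assuming users are identified by plain strings; the enum and function names are hypothetical.

```python
from enum import Enum


class BookingState(Enum):
    REQUESTED = "requested"
    ACCEPTED = "accepted"
    DECLINED = "declined"


def can_view_photo(viewer: str, owner: str, lessor: str, state: BookingState) -> bool:
    """Decide whether `viewer` may see the photo of Lessee `owner` for this booking."""
    if viewer == owner:
        return True  # Lessees can always see their own photo
    if viewer == lessor:
        return state is BookingState.ACCEPTED  # only after the rental decision
    return False  # deny by default for anyone else


if __name__ == "__main__":
    print(can_view_photo("bob", "alice", "bob", BookingState.REQUESTED))  # False
    print(can_view_photo("bob", "alice", "bob", BookingState.ACCEPTED))   # True
```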

Security – Audit and Testing

Airbnb may want to do some self-testing to determine whether (1) Lessors are discriminating and (2) Airbnb is somehow subtly leaking information about Lessee ethnicity. Statistical analysis and review of the data could provide guidance here, or test Lessee profiles that are identical except for ethnic markers could be introduced for testing purposes. I remember various news stories about past tests run against corporate recruiters, in which equally qualified candidates differing only in their names, one distinctly ethnic, were submitted, only to see the non-ethnic candidate get a call for an interview.
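As one starting point for the statistical analysis, the sketch below compares each Lessor’s acceptance rates across ethnic groups and flags Lessors whose lowest group rate falls below four-fifths of their highest. The four-fifths threshold is borrowed from U.S. employment-selection guidelines as a rough screening heuristic; it is a substitute chosen for illustration, not a method the original analysis prescribes. The input format and names are hypothetical, and a real audit would also need significance testing and controls for confounders such as dates, price and party size.

```python
from collections import defaultdict


def flag_lessors(decisions, threshold=0.8, min_requests=20):
    """Flag Lessors whose lowest group acceptance rate is below `threshold` x their highest.

    decisions: iterable of (lessor_id, group, accepted) tuples, where `group` is
    ethnicity collected for auditing only (never shown to Lessors).
    Groups with fewer than `min_requests` requests are ignored as too noisy.
    Returns {lessor_id: lowest_rate / highest_rate} for flagged Lessors.
    """
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # lessor -> group -> [accepted, total]
    for lessor, group, accepted in decisions:
        cell = counts[lessor][group]
        cell[0] += int(accepted)
        cell[1] += 1

    flagged = {}
    for lessor, groups in counts.items():
        rates = {g: acc / total for g, (acc, total) in groups.items() if total >= min_requests}
        if len(rates) < 2 or max(rates.values()) == 0:
            continue  # nothing meaningful to compare
        ratio = min(rates.values()) / max(rates.values())
        if ratio < threshold:
            flagged[lessor] = ratio
    return flagged
```

A flag here is a prompt for a closer look (or for paired test profiles), not a finding of discrimination on its own.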

Once the available controls have been identified, the next step is to actually pick controls. Below is an example of how controls might be combined. This is by no means prescriptive; the actual controls chosen will be up to Airbnb or any company in a similar situation. In any case, the decision should be based on business needs, issues of legitimacy and the proportionality of the processing to the risk to individuals.

| Control | Status | Details |
|---|---|---|
| Notify Lessor not to discriminate | Used | Notification displayed at time of posting rental. |
| Don’t collect ethnicity directly | Not used | Collecting for auditing and testing purposes, but it will not be shown to Lessors. |
| Don’t collect ethnicity indirectly (photos, video, audio) | Not used | Collecting, but not showing pictures until after the Lessor has accepted. |
| Masking | Used | Directly collected ethnicity field will not be shown to Lessor. |
| Granulation | Not used | Not relevant to the specific data elements collected. |
| Compression | Not used | Not relevant to the specific data elements collected. |
| De-identification | Not used | Not necessary because we’re only showing aggregate data to Lessor. |
| Aggregation | Used | Lessors can only choose eligible renters based on allowed criteria, not select individuals. |
| Access Management | Used | Lessors can’t view individual profiles before allowing a rental. |
| Auditing and Testing | Used | Data analysis will be performed to identify Lessors who are discriminating. |

A note on the Fair Information Practice Principles

Originally formulated in the 1970s in response to U.S. government databases, the Fair Information Practice Principles are ingrained in every privacy professional’s head. They form the basis for most international laws and regulations as well as privacy frameworks such as GAPP. They are what most practitioners turn to in order to mitigate privacy risks. Provide notice in the form of a privacy statement on your website, give the data subjects a few choices, let them see the information you have and make sure it’s secure, and you’re all set, right? Wrong. The FIPPs provide an inadequate set of controls in the modern era. Notice fails because of asymmetric information between data subject and controller. Choice is often illusory and subject to asymmetric power in the relationship. Full access is rare, and participation is minimal and can have high transaction costs relative to its benefit. The fallback is security because it is reasonably objective. Let’s see where the FIPPs would get us when applied to the Exclusion issue identified above.

Awareness/Notice

Airbnb could (and probably does, in their privacy policy) disclose that personal information of Lessees will be shared with Lessors. Technically this does mitigate the Exclusion risk. Remember, Exclusion is the “failure to let data subject know about data that others have about her and participate in its handling and use.” Once you let the data subject know what data others have, you’ve solved half the problem (participation is dealt with below), correct? Well, no. You have to ask whether the disclosure is meaningful. Was the disclosure buried in a privacy policy that no one reads? Even if the disclosure were obvious given the context and use of the system, which it probably is with Airbnb, does the user appreciate, in that instance, that their picture or name conveys ethnicity? Finally, even if the disclosure were explicit and obvious, does the data subject understand the ramifications? Some hyper-sensitive users who have been discriminated against in the past certainly will, but perhaps not everybody. Most people suffer from the cognitive bias of hyperbolic discounting, in which future costs are discounted against present benefits: here, the benefit of using the system against the cost of being discriminated against.

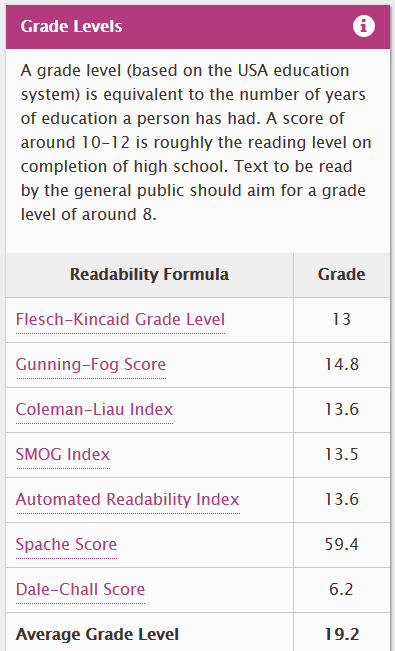

Notably, Airbnb’s privacy policy is 6,285 words long, takes two clicks to reach from the home page and has an average grade level of 19.2 (Flesch-Kincaid grade level of 13), meaning it takes a college-level, and potentially graduate-level, education to read and understand it. “Text to be read by the general public should aim for a grade level of around 8.” In 2012, the median length of the privacy policies of the top 75 websites was only about 2,500 words.
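For readers who want to check their own policies, the Flesch-Kincaid grade level is computed from average sentence length and average syllables per word: 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59. The sketch below implements that formula; the syllable counter and the sentence and word splitting are rough heuristics of my own choosing, so results will differ somewhat from dedicated readability tools and should not be expected to reproduce the figures quoted above.

```python
import re


def fk_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid grade level from raw counts."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a trailing silent 'e', minimum 1."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(1, n)


def grade_level(text: str) -> float:
    """Approximate grade level of a block of English text."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    syllables = sum(count_syllables(w) for w in words)
    return fk_grade(len(words), sentences, syllables)
```

As a worked example of the formula, a text with 100 words, 5 sentences and 150 syllables scores 0.39 × 20 + 11.8 × 1.5 − 15.59 = 9.91.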

Choice/Consent

Airbnb could choose to give users the option of not including identifying information (pictures, names, etc.). However, if some users take this option while others don’t, those who choose to maintain their privacy will be viewed with suspicion vis-à-vis those who have revealed their identity. If you’re hiding something, you must have something to hide, right? The net effect is that an ethnic-minority individual who chooses not to disclose their ethnicity (directly or indirectly) could be discriminated against regardless.

Access/Participation

Though one could take an expansive view of “participate” in the use of data about you, access and participation are usually limited to viewing information and the ability to correct it if it is factually inaccurate. If you consider the history of the FIPPs, they were devised to deal with government databases. The idea was that the government didn’t want to adversely impact you because of bad data (deny you benefits, arrest you, etc.), so you should have a right to see what it is using to make its determination and to correct that information, if necessary. You don’t, however, get a right to participate more fully in the use. So Airbnb could provide the right to access any data (which you likely provided in the first place) and ensure its accuracy. While this, in combination with Awareness/Notice above, would eliminate the Exclusion invasion, the underlying discrimination could remain. It now becomes an issue of Secondary Use under the Solove Taxonomy. The user supplied the information (a picture, for instance) for one purpose, but not for the purpose of allowing them to be discriminated against (the secondary use of the information). To eliminate this, Airbnb would need to solicit consent for this use, which is neither a likely nor a very customer-friendly scenario:

☐ I consent to the use of my picture to discriminate against me based on my ethnicity.

Integrity/Security

Except in the case of Airbnb restricting access to data conveying ethnicity until after the rental decision, we’re mostly considering the integrity aspect of information security. Airbnb wants to make sure that its systems aren’t altered, purposefully or accidentally, in ways that affect the accuracy of the information. However, this doesn’t in any way affect the Lessor’s ability to discriminate and doesn’t mitigate the Exclusion issue.

Enforcement/Redress

Enforcement refers to the enforcement of the other four FIPPs, so we’re not discussing enforcement of a policy of non-discrimination. An argument could be made that implied consent to disclose identifying information is premised on that information not being used to discriminate. Therefore, enforcing the consent principle requires enforcing the parameters under which consent was granted. Redress would allow individuals who feel discriminated against to file a complaint. Practically, however, proving discrimination after the fact is hard.

Side note: Airbnb seems to have overlooked soliciting feedback via their privacy policy (i.e., they didn’t supply an email address in it).

Conclusion

While my analysis here is by no means exhaustive, it should give readers a look into a privacy analysis and how this could be applied in a real world situation. Privacy practitioners often are restricted to looking for confidentiality issues and then only applying a limited set of controls. They must be given a broader brush and be able to influence system design. This is imperative to the idea of Privacy by Design.