Recently I had the opportunity to work with not one but two start-ups in the AI space on their privacy-by-design strategies. Luckily for the reader, both agreed to let me publish my analysis. While both use AI in the back end, they are going after completely distinct markets and present very different privacy risks.

The first company, Mr Young, is a Canadian start-up that aims to support people's mental well-being by directing them to appropriate resources and providing in-app tools to reduce anxiety and stress and improve quality of life. The second, Eventus AI, uses AI to help sales professionals cull prospects from the people they meet at an event, such as a conference.

For both companies, I proceeded with my standard analysis by asking a seemingly innocuous question: is the application beneficial to the affected population? But that immediately leads to two more questions:

- Who is the affected population?

- What is the application?

Once those questions are answered and the benefit analysis is complete, we can proceed to “design” the services for privacy. For both companies, this took the form of five essential elements:

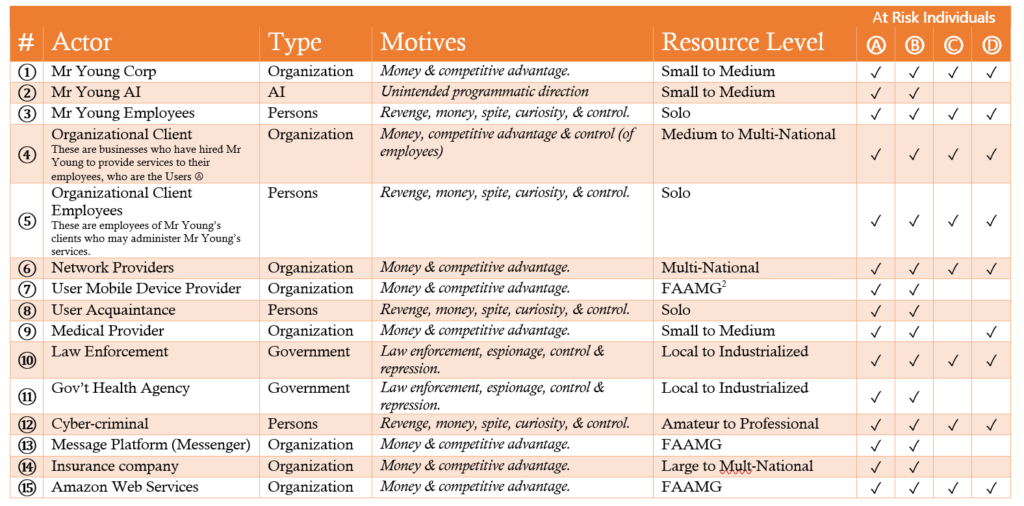

(1) Identify the threat actors

(2) Build a privacy model

(3) Determine what information is ‘necessary’ to provide the service

(4) Determine privacy risks posed by the threat actors

(5) Suggest controls to eliminate or reduce risks

I’m deliberately keeping this blog post short, because I invite you to read each company’s report yourself (both are quite lengthy). You can download them here:

Mr Young privacy design report

Eventus AI privacy design report

If you want to learn more about the technique and thought process behind the reports, I invite you to check out my book, Strategic Privacy by Design.